Mere Symbols

The Vatican published a book on AI: Encountering Artificial Intelligence: Ethical and Anthropological Investigations. It’s a well laid out book, with good insights, as one might expect from expert scholars and theologians.

What’s prompted the book, of course, is the meteoric rise of large language models (and probably diffusion-based generative audio-visual models like StableDiffusion), which have captured the public imagination. As the book says, ChatGPT grew to more than 100 million users in its first week of publication. That’s… a lot of people, so it’s no wonder the ethical implications behind AI have captured the attention of many. AIs have already convinced couples to divorce and people to commit suicide. Clearly, someone needs to be talking about ethics at this point.

Naturally, this book approaches AI from a Catholic perspective, and in that sense, I think it achieves its goals. I’m going to share some of the notes and thoughts I had while going through the book, but before I do that I want to start at the beginning.

Not Your Father’s Computing

One of the first things the book does is define its terms. On page 18, the authors write:

A computation is “the transformation of sequences of symbols according to precise rules.” This set of precise rules or “recipe for solving a specific problem by manipulating symbols” is called an algorithm.

It then defines:

Machine learning (ML) is a computational process and method of analysis by which algorithms make inferences and predictions based on input data

They’re not wrong, but I’m not sure they’re right either. A lot of common discourse today talks about ‘algorithms’, these abstract ever-present entities of pure logic that run our lives. But what is an algorithm really? I think the authors basically have it correct. An algorithm is a set of precise rules to solve a specific problem by manipulating symbols. In this way, algorithms are very useful to us and predate computing. For example, multi-digit multiplication is a specific algorithm that we all know.

Now certainly, many machine learning problems, such as regressions and linear programming and some clustering methods, involve algorithms. However, I think the book fails to contend with the fact that these algorithms are not what people mean when they talk about AI. None of these methods (or their related algorithmic cousins, expert systems) have been able to achieve anything close to ChatGPT.

Algorithms like the ones above can be analyzed, broken down, and understood. Modern AI is based, as the book identifies, on the notion of deep neural networks. Without going into too much technical detail, essentially they take as input a large array of numbers and output another large array of numbers (thrilling, I know). What happens in between is pretty much a mystery.

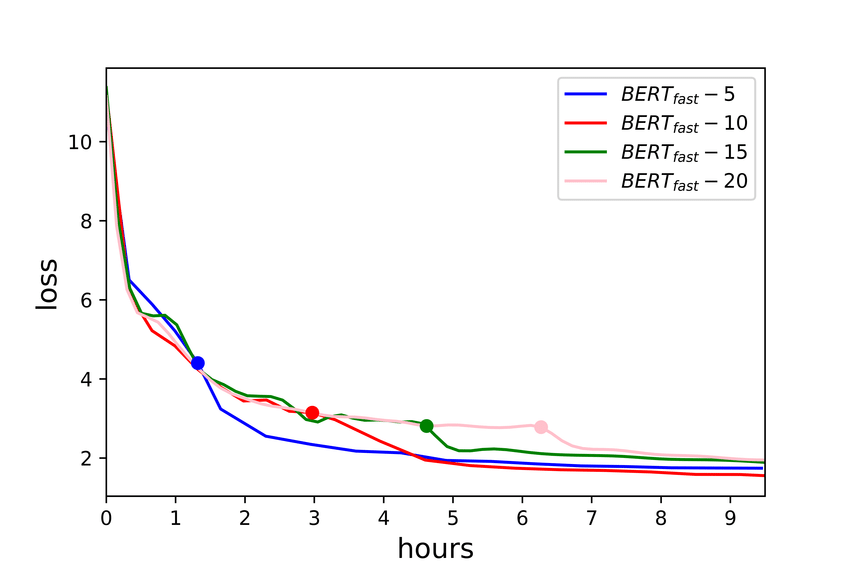

Now when I say a ‘mystery’ I don’t mean that we don’t know what’s going on. Obviously, we do. Given that this is what I earn my living off of, I certainly know all the operations taking place in minute detail (or at least my boss thinks I do!). What we don’t know is what any of the numbers mean. The numbers that govern the internal processing are derived not from any description of an algorithm, but from a learning process. Again, the learning process is similarly a mystery. While we know every step of how to run the process, we don’t really understand it. For example, the graph below is the training loss of various BERT models. As loss decreases, the model is performing better. Notice that at some points in training, the loss doesn’t move at all. We repeat the same training process, but nothing changes, until finally, the loss drops drastically in a short period of time, almost as if the model ‘discovered’ something. Yet, ask any machine learning researcher what this all means, and you’ll get blank stares or overconfident responses. No one knows. It’s not for lack of trying either; there have been many papers explaining various aspects 1 2, but there’s no widespread consensus 3.

Essentially, while we understand what to do here, we don’t understand deeply why it works. This is a lot different than long multiplication, for example. It’s trivial to explain that algorithm. It relies on the distributive property of multiplication and the definition of place value. Every step is justifiable as to why it results in the correct answer. We’ve developed very good mechanisms 4 to mechanically prove that following these steps will lead to the correct answer. Nothing similar exists for neural network models.
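To make the contrast concrete, here is a minimal sketch of schoolbook long multiplication in Python. The function name `long_multiply` and the digit-list representation are choices made for this illustration only. Each single-digit product lands in the column given by place value, and summing the partial products is just the distributive property at work.

```python
def long_multiply(a: int, b: int) -> int:
    """Schoolbook long multiplication of two non-negative integers."""
    if a < 0 or b < 0:
        raise ValueError("this sketch only handles non-negative integers")

    # Digits stored least-significant first, so index == power of ten.
    a_digits = [int(c) for c in reversed(str(a))]
    b_digits = [int(c) for c in reversed(str(b))]
    result = [0] * (len(a_digits) + len(b_digits))

    for j, db in enumerate(b_digits):
        carry = 0
        for i, da in enumerate(a_digits):
            # Place value: digit i of a times digit j of b belongs in column i + j.
            current = result[i + j] + da * db + carry
            result[i + j] = current % 10
            carry = current // 10
        # Leftover carry goes into the next, still untouched, column.
        result[j + len(a_digits)] += carry

    return int("".join(str(d) for d in reversed(result)))
```

Every line here can be justified from arithmetic alone, which is exactly what we cannot yet do for the weights of a trained network.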

Sir, this is a Wendy’s

So if neural networks are not algorithms, what are they? Well, again, I think we have to be careful with terms. Neural networks (or really, transformers, in the case of GPT) are algorithms. That means they are a sequence of well-defined steps to follow. However, the models that they run (meaning the weights learned during the learning process, combined with the algorithm to execute them) are not.

This may seem like a useless distinction but it’s really not. One common thing I’ve witnessed in the current debates on AI is that many computer scientists are more willing to entertain the idea that consciousness and other seemingly intractable problems are simply emergent phenomena, while others dismiss them outright as pure fantasy.

Personally, I believe that AI machines cannot have the same kind of agency as humans (I’ll get to this in a later post), but I do think I fall into the camp of computer scientists who are absolutely willing to entertain the notion that they might. I think this difference in approach comes down to one of the most shocking discoveries in computer science, one that any programmer or AI researcher would be familiar with.

Okay, I’m going to go back into deep computer science here, so everyone hold on tight.

Imagine a grid of cells. Each cell is either dead or alive. Each cell has eight neighbors. I’m going to give the following rules to update the cell:

- If a cell is dead, and the cell has three live neighbors, then make the cell alive in the next round (birth rule).

- If a cell is alive, and has 0 or 1 live neighbors, then it dies. Similarly, if it is alive and has more than 3 live neighbors, it dies (death rule).

- If a cell is alive and has 2 or 3 live neighbors, then it stays alive (survival rule).

Easy right? We can understand this completely. If I give you a piece of graph paper with some cells filled in to signal they’re alive and some blank to show they’re dead, you can apply these rules for as long as I ask, and give me the result. In fact, we can program computers to do this 5.
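As a rough illustration of how little code the rules need, here is a minimal Python sketch. It assumes an unbounded grid and stores only the coordinates of live cells. The function name `step` and the set-of-tuples representation are choices made for this example, not anything taken from the book.

```python
from collections import Counter


def step(live: set[tuple[int, int]]) -> set[tuple[int, int]]:
    """Apply one round of the birth, death, and survival rules."""
    # For every cell that touches at least one live cell, count its live neighbors.
    neighbor_counts = Counter(
        (x + dx, y + dy)
        for (x, y) in live
        for dx in (-1, 0, 1)
        for dy in (-1, 0, 1)
        if (dx, dy) != (0, 0)
    )
    return {
        cell
        for cell, n in neighbor_counts.items()
        # Exactly 3 neighbors: birth if dead, survival if alive.
        # Exactly 2 neighbors: survival only. Anything else: dead next round.
        if n == 3 or (n == 2 and cell in live)
    }


# A "blinker": three cells in a row that flip between horizontal and vertical.
blinker = {(0, 1), (1, 1), (2, 1)}
after_one = step(blinker)
after_two = step(after_one)
```

Here `after_one` is the vertical bar `{(1, 0), (1, 1), (1, 2)}` and `after_two` is the original blinker again. The whole system is about a dozen lines, which is what makes its behavior so surprising.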

The truly awesome thing about these three rules is that they fully encapsulate every possible computation that humankind can do. You can arrange alive or dead cells to calculate pi 6, make a computer 7, or even simulate itself 8. Every single program can be encoded into a pattern of live and dead cells.

This system is called Conway’s Game of Life. And here’s the thing: while we fully understand how to run Conway’s Game of Life (just follow the rules above), we actually can’t say a whole lot about it. There are useful patterns, like the ones above, and then there are some which we really can’t say anything about. They may do something, they may not. In particular, given a starting state, it’s impossible to tell in general whether or not the system will reach another state at some point in the future. It’s not only unknown whether they do, it’s unknowable and maybe even indescribable. This is a form of what is called the halting problem.

We understand the meta-algorithm completely, but we cannot understand the behavior with just any input.

Okay, computer science over.

This is really similar to AI. We understand the rules of the network (the algorithm) completely. For GPT, it’s a deep transformer decoder-only model with multi-headed softmax-attention, an embedding step and a linear projection into the final domain (phew!). Given a description like this, one can easily write a program that does the computation step. However, this is not ChatGPT. ChatGPT is this plus the weights trained by OpenAI. And, when you do the computation (the algorithm) with the weights, you get something that seems much more advanced than a long sequence of additions, multiplications, exponentiations, and divisions. Like… a lot more advanced.
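To give a flavor of what "the computation step" looks like, here is a heavily simplified sketch of one ingredient: a single head of causal softmax attention, written in plain Python. It leaves out multiple heads, the embedding step, normalization, feed-forward layers, and the final projection. All names (`causal_attention`, `w_q`, `w_k`, `w_v`) are made up for this illustration and do not reflect OpenAI's actual code.

```python
import math


def softmax(scores):
    """Turn a list of scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(matrix, vec):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vec)) for row in matrix]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def causal_attention(xs, w_q, w_k, w_v):
    """Single-head causal self-attention over a list of token vectors."""
    queries = [matvec(w_q, x) for x in xs]
    keys = [matvec(w_k, x) for x in xs]
    values = [matvec(w_v, x) for x in xs]
    scale = math.sqrt(len(queries[0]))

    outputs = []
    for t, q in enumerate(queries):
        # Causal mask: position t may only look at positions 0..t.
        scores = [dot(q, keys[s]) / scale for s in range(t + 1)]
        weights = softmax(scores)
        # The output is a weighted average of the visible value vectors.
        mixed = [
            sum(w * values[s][d] for s, w in enumerate(weights))
            for d in range(len(values[0]))
        ]
        outputs.append(mixed)
    return outputs


identity = [[1.0, 0.0], [0.0, 1.0]]
tokens = [[1.0, 0.0], [0.0, 1.0]]
result = causal_attention(tokens, identity, identity, identity)
```

Nothing in this sketch goes beyond additions, multiplications, exponentials, and divisions. What the real model does depends entirely on the numbers inside matrices like `w_q`, `w_k`, and `w_v`, and those come from training, not from the description of the algorithm.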

For reasons I won’t go into here, even saying that GPT is a sequence of additions, multiplications, etc. should not lead us to think the algorithm is simple. From mathematics, representation theory teaches us that vectors and matrices can represent arbitrary groups and group operators. In other words, AI models could be representing a system of rules much more complex than the 3-rule system of Conway’s Game of Life. They’re mechanistically capable of doing that.

Given what we know about the Game of Life, the idea that this process could result in something more is not one I can dismiss outright. Simply understanding the steps does not necessarily give us any insight into the overall large problem. And I think this is why many computer scientists are fully bought into the idea of emergent complexity while others think it crazy. It’s simply something computer scientists contend with on a regular basis 9.

A bunch of rocks 10

Ultimately, there is an insidious category mistake in simply discussing AI in terms of algorithms. There are algorithms underpinning AI (the model structure), but this algorithm is not what people talk about when they say AI. When someone talks about a computer, do they mean the physical box or the physical box plus the software that runs it? It’s the same with AI. AI is the model plus the weights. The model is an algorithm. The model and weights are capable of doing something else, but it’s not clear what the weights used for GPT et al are doing.

Quick thought experiment to drive this point home: What happens when the weights to a GPT-like model are all zero? The output is… zero, no matter the inputs. Again, understanding the algorithm does not mean we know what the weights are doing.
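The thought experiment can be sketched with a toy stand-in for a model. This is not GPT, just an embedding lookup, one hidden layer, and an output projection, with no bias terms. Every name here (`toy_logits`, `VOCAB`, `DIM`, and so on) is hypothetical and exists only for this example.

```python
def zeros(rows, cols):
    """Build a rows x cols matrix filled with 0.0."""
    return [[0.0] * cols for _ in range(rows)]


def matvec(matrix, vec):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vec)) for row in matrix]


def toy_logits(token_id, embedding, w_hidden, w_out):
    """Embedding lookup -> hidden layer with ReLU -> one score per vocabulary entry."""
    x = embedding[token_id]
    hidden = [max(0.0, h) for h in matvec(w_hidden, x)]
    return matvec(w_out, hidden)


VOCAB, DIM = 5, 4  # toy sizes, chosen arbitrarily

# The same algorithm as any trained version of this toy, but with all weights zero.
embedding = zeros(VOCAB, DIM)
w_hidden = zeros(DIM, DIM)
w_out = zeros(VOCAB, DIM)

all_logits = [toy_logits(t, embedding, w_hidden, w_out) for t in range(VOCAB)]
```

Every entry of `all_logits` is a list of zeros, whichever token goes in. If a softmax were then applied to those scores, every token would come out equally likely, so the model would have no preference about anything. The algorithm is identical to a trained model's; only the weights differ.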

I am not saying that this proves that AI models are anything special. I’m just pointing out that simply being an algorithm does not mean the system lacks transcendent complexity. I drew the analogy to Conway’s Game of Life because I think it’s important to realize that even simple algorithms can, when put together, produce results that defy the abilities of human cognition. Just because something is algorithmic does not make it characterizable. While Conway’s Game of Life is deterministic (it’s never subject to random chance), it is actually not determinable. Something being algorithmic does not make it understandable.

Mere symbols

This is an important point. On page 59, the authors say:

Definitions of reason and intelligence commonly applied to AI abandon this interiority in favor of a twofold reduction. First, rationality and understanding become the logical manipulation of symbolically represented information; and second, intelligence becomes efficacious problem-solving

Given the path laid out above, it’s apparent that there is nothing reductive about symbolic manipulation. As we’ve seen, manipulation of symbols, while simple, provably can lead to things which transcend human understanding. It’s truly the purview of God Himself.

Now this may all sound theoretical to you, but I assure you it’s not. In 2015, the undecidability of the halting problem was used to show that the spectral gap problem (the problem of determining whether there is an energy gap between a quantum system’s ground state and its first excited state) is undecidable in two or more dimensions 11. And yet, our three-dimensional (maybe more?) universe chugs on as always in a seemingly very deterministic fashion.

Given all that, it’s not at all obvious why it would be bad if intelligence were to be ‘merely’ efficacious problem-solving through the manipulation of symbols. It’s enough to create infinite complexity. Is it really a stretch to imagine that – somewhere in all of that – we get something else?

How Do Transformers Learn Topic Structure: Towards a Mechanistic Understanding, Li, Yuchen and Li, Yuanzhi and Risteski, Andrej, Proceedings of the 40th International Conference on Machine Learning

Transformers Learn In-Context by Gradient Descent, von Oswald, Johannes and Niklasson, Eyvind and Randazzo, Ettore and Sacramento, João and Mordvintsev, Alexander and Zhmoginov, Andrey and Vladymyrov, Max, Proceedings of the 40th International Conference on Machine Learning, Proceedings of Machine Learning Research

For example, Revisiting the Hypothesis: Do pretrained Transformers Learn In-Context by Gradient Descent? completely disagrees with the above.

See here for an online version

In general, the halting problem not only tells us we can’t determine whether a particular program will end or not, but also tells us that most useful properties of programs are not determinable.

Cubitt, T., Perez-Garcia, D. & Wolf, M. Undecidability of the spectral gap. Nature 528, 207–211 (2015).